AWS event-driven service

Hey everyone,

A few weeks ago I wrote about invoking AWS Lambda "logicless" with metadata. By "logicless" I mean a way to implicitly build logic between the different parts of a service through events. If you are not familiar with how this works on AWS, I invite you to read how I invoked a Lambda with SNS and S3.

I decided to dig deeper into this subject because I am currently working on a project with a tight deadline, and I tried to optimize the way I structured it. Unsurprisingly, it is an event-driven service. It is not finished yet, but it works well so far.

Use AWS events

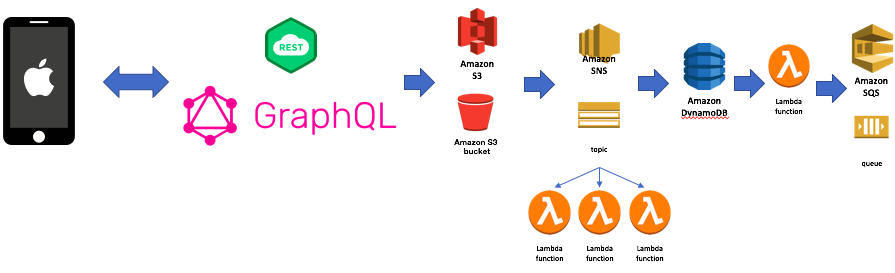

In my experience, when you are working with one or more applications, the usual rule is to design a service that serves them. Most of the time this is done with a REST or GraphQL API: you define an entry point and routes, and you can create, read, update or delete resources. However, I don't think this is mandatory. I am building a system where I still need to handle image resources, process them and send the results back to my external application.

An API is great: you get authentication and control, and you can add more logic and constraints. You can filter your inputs precisely and do exactly what was planned. However, I think you can combine AWS authentication with the AWS event layer to handle your inputs and make them behave like an API. That's why I am delighted to use AWS events.

There are several ways I could have designed this:

API and event-driven way

Here our API handles authentication and adds another layer of input control. Uploading data into the S3 bucket goes through the API. The remaining steps, such as logging data, processing it and creating queues from DynamoDB streams, are handled by AWS events as a chain reaction.

API-centric way

The API-centric way is the same as above, except that every operation is handled by the application through the API. Authentication is handled by the API, every command goes through the API, and there is no intrinsic logic between the parts of the service.

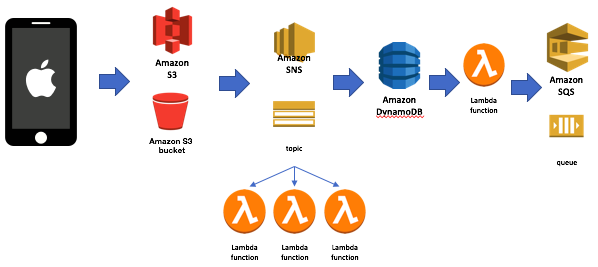

AWS event-driven way

The event-driven way is what I finally designed. It does not add API-level authentication on top of the AWS one (IAM/Cognito Identity), which means less control: the client puts data directly into the bucket, and the chained process is triggered by AWS events.

How to use AWS events

What follows is not a complete course on every way to use AWS events; I'm sure there is a huge variety of ways to use them. Instead, I'll show how I used them to build an organized flow with S3, DynamoDB, SNS and SQS events.

My entry point is a bucket whose IAM policy only lets authenticated users put data into it (authentication happens on the front end, in my external app). First, I attached an ObjectCreated:Put event notification to my S3 bucket, so each time a file is put into the bucket, an event is published to an SNS topic. See How to trigger Lambda with SNS for more information. Why SNS? I could call a Lambda directly if I were sure only a single function would ever be called. However, if I want to call extra Lambdas from the same event in the future, I need an SNS topic with several subscriptions. In other words, it's just a way to anticipate the architecture.
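For reference, here is a minimal sketch of a notification configuration that routes ObjectCreated:Put events to an SNS topic. It builds the body of S3's PutBucketNotificationConfiguration request as a plain TypeScript object so it runs without the AWS SDK; the topic ARN and `Id` are placeholders and the helper `putEventsToTopic` is hypothetical. The topic's access policy must also allow `s3.amazonaws.com` to call `sns:Publish`, otherwise S3 refuses the configuration.

```typescript
// Subset of S3's NotificationConfiguration shape (only topic destinations).
interface TopicConfiguration {
  Id?: string;
  TopicArn: string;
  Events: string[];
}

interface NotificationConfiguration {
  TopicConfigurations: TopicConfiguration[];
}

// Hypothetical helper: send every ObjectCreated:Put event of the bucket to one SNS topic.
function putEventsToTopic(topicArn: string): NotificationConfiguration {
  return {
    TopicConfigurations: [
      {
        Id: "object-created-put-to-sns", // placeholder identifier
        TopicArn: topicArn,
        Events: ["s3:ObjectCreated:Put"],
      },
    ],
  };
}

// Placeholder ARN: replace with your own topic.
const config = putEventsToTopic("arn:aws:sns:eu-west-1:123456789012:uploads");
console.log(JSON.stringify(config, null, 2));
```

With this in place, each extra Lambda only needs its own subscription on the topic; the bucket configuration stays untouched.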

In your Lambda you then parse the event and work with it. For example, when the Lambda is subscribed to SNS and the source is S3, the S3 event is wrapped in the SNS record, serialized as a string in the `Message` field:

```json
{
  "Records": [{
    "EventSource": "aws:sns",
    "EventVersion": "1.0",
    "EventSubscriptionArn": "EVENT_ARN_NUMBER",
    "Sns": {
      "Type": "Notification",
      "MessageId": "",
      "TopicArn": "",
      "Subject": "Amazon S3 Notification",
      "Message": "JSON.stringify(S3_RECORD)",
      "Timestamp": "2017-04-01T14:00:53.511Z",
      "SignatureVersion": "1",
      "Signature": "",
      "SigningCertUrl": "",
      "UnsubscribeUrl": "",
      "MessageAttributes": {}
    }
  }]
}
```
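A minimal sketch of the unwrapping step in a Node.js Lambda written in TypeScript, assuming the event shape shown above. The interfaces only declare the fields the code reads, and `extractS3Objects` and `UploadedObject` are illustrative names, not part of any AWS library. Two details are easy to miss: `Message` is a string that must be parsed, and S3 URL-encodes object keys (spaces arrive as `+`).

```typescript
// Minimal types for the fields we read; real events carry more.
interface SnsRecord {
  EventSource: string;
  Sns: { Subject?: string; Message: string };
}

interface SnsEvent {
  Records: SnsRecord[];
}

interface S3Record {
  eventName: string;
  s3: { bucket: { name: string }; object: { key: string } };
}

// Illustrative result type.
interface UploadedObject {
  bucket: string;
  key: string;
  eventName: string;
}

// Unwrap each SNS record: its Message is the S3 notification serialized as JSON.
function extractS3Objects(event: SnsEvent): UploadedObject[] {
  const objects: UploadedObject[] = [];
  for (const record of event.Records) {
    const s3Event = JSON.parse(record.Sns.Message) as { Records?: S3Record[] };
    // S3 also publishes an "s3:TestEvent" message without Records when the notification is set up.
    for (const s3Record of s3Event.Records ?? []) {
      objects.push({
        bucket: s3Record.s3.bucket.name,
        // Keys are URL-encoded, with spaces encoded as "+".
        key: decodeURIComponent(s3Record.s3.object.key.replace(/\+/g, " ")),
        eventName: s3Record.eventName,
      });
    }
  }
  return objects;
}

// Lambda entry point; the actual processing is left out of this sketch.
export const handler = async (event: SnsEvent): Promise<UploadedObject[]> => {
  const objects = extractS3Objects(event);
  for (const obj of objects) {
    console.log(`${obj.eventName}: s3://${obj.bucket}/${obj.key}`);
  }
  return objects;
};
```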

Your Lambda holds the logic. In my case, I logged my files into DynamoDB and created several items in various tables. Once this was done, I wrote another Lambda and attached a DynamoDB stream to it as its trigger.
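As an illustration of that logging step, here is a sketch of one row in DynamoDB's low-level attribute-value format, which is also the format stream records use later. The table, the helper `fileItem` and all attribute names are hypothetical; numbers travel as strings under the `N` type, which is how DynamoDB's wire format represents them.

```typescript
// Subset of DynamoDB's low-level attribute values.
type AttributeValue =
  | { S: string }
  | { N: string }
  | { L: AttributeValue[] };

type Item = Record<string, AttributeValue>;

// Hypothetical row for a "files" table; attribute names are illustrative only.
function fileItem(bucket: string, key: string, sizeBytes: number, uploadedAt: Date): Item {
  return {
    fileId: { S: `${bucket}/${key}` },
    bucket: { S: bucket },
    key: { S: key },
    sizeBytes: { N: String(sizeBytes) }, // numbers are sent as strings
    uploadedAt: { S: uploadedAt.toISOString() },
    items: { L: [] }, // a list that later processing steps could append to
  };
}

const item = fileItem("my-bucket", "photos/cat.jpg", 2048, new Date("2017-04-01T14:00:53.511Z"));
console.log(JSON.stringify(item));
```

With the AWS SDK, an object like this would be the `Item` parameter of a PutItem call.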

DynamoDB Streams are pretty good, and they work much like S3 events. With a DynamoDB stream you can listen to every kind of change a table emits (INSERT, MODIFY, REMOVE). Each stream record has a format, the stream view type, which you choose when you enable the stream on the table:

- KEYS_ONLY—only the key attributes of the modified item.

- NEW_IMAGE—the entire item, as it appears after it was modified.

- OLD_IMAGE—the entire item, as it appeared before it was modified.

- NEW_AND_OLD_IMAGES—both the new and the old images of the item.

More information is available in the DynamoDB Streams documentation:

http://docs.aws.amazon.com/en_en/amazondynamodb/latest/developerguide/Streams.html
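To make the four view types concrete, here is a small sketch of which images a stream record carries, depending on the view type and on the event name (`INSERT`, `MODIFY` or `REMOVE`). It encodes the documented behavior as I understand it: keys are always present, an inserted item has no old image, and a removed item has no new image. The function `imagesIn` is hypothetical.

```typescript
type StreamViewType = "KEYS_ONLY" | "NEW_IMAGE" | "OLD_IMAGE" | "NEW_AND_OLD_IMAGES";
type StreamEventName = "INSERT" | "MODIFY" | "REMOVE";

// Names of the images present in a stream record for a given view type and change.
function imagesIn(viewType: StreamViewType, eventName: StreamEventName): string[] {
  const images = ["Keys"]; // keys are always included
  const hasOld = eventName !== "INSERT"; // an inserted item had no previous version
  const hasNew = eventName !== "REMOVE"; // a removed item has no new version
  if ((viewType === "NEW_IMAGE" || viewType === "NEW_AND_OLD_IMAGES") && hasNew) {
    images.push("NewImage");
  }
  if ((viewType === "OLD_IMAGE" || viewType === "NEW_AND_OLD_IMAGES") && hasOld) {
    images.push("OldImage");
  }
  return images;
}

console.log(imagesIn("NEW_AND_OLD_IMAGES", "MODIFY")); // [ 'Keys', 'NewImage', 'OldImage' ]
console.log(imagesIn("NEW_AND_OLD_IMAGES", "INSERT")); // [ 'Keys', 'NewImage' ]
console.log(imagesIn("KEYS_ONLY", "REMOVE"));          // [ 'Keys' ]
```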

I always work with the NEW_AND_OLD_IMAGES format. It lets you compare the item before and after a MODIFY event and make your criteria more precise. For example, suppose you care about the length of a list in the item going from 9 to 10 entries, and this should happen only once. You can parse the event, check in the old image that the list had 9 entries before the modification, and check in the new image that it now has 10. This way your Lambda reacts precisely to one specific change.
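A minimal sketch of that criterion as a stream-triggered Lambda in TypeScript. It assumes the stream uses NEW_AND_OLD_IMAGES and that the list attribute is called `items` (a hypothetical name); `crossesThreshold` and `listLength` are illustrative helpers. Because the check requires exactly 9 entries before and 10 after, later modifications (10 to 11, or edits that keep the length at 10) do not match again.

```typescript
// Subset of DynamoDB's attribute-value format, as found in stream images.
type AttributeValue =
  | { S: string }
  | { N: string }
  | { L: AttributeValue[] }
  | { M: Record<string, AttributeValue> };

interface StreamRecord {
  eventName: "INSERT" | "MODIFY" | "REMOVE";
  dynamodb: {
    Keys: Record<string, AttributeValue>;
    OldImage?: Record<string, AttributeValue>;
    NewImage?: Record<string, AttributeValue>;
  };
}

// Length of a list attribute, or undefined if it is missing or not a list.
function listLength(image: Record<string, AttributeValue> | undefined, name: string): number | undefined {
  const value = image?.[name];
  return value !== undefined && "L" in value ? value.L.length : undefined;
}

// Hypothetical criterion: true only for the MODIFY that takes the list from `from` to `to` entries.
function crossesThreshold(record: StreamRecord, attribute: string, from: number, to: number): boolean {
  if (record.eventName !== "MODIFY") return false;
  return (
    listLength(record.dynamodb.OldImage, attribute) === from &&
    listLength(record.dynamodb.NewImage, attribute) === to
  );
}

// Stream-triggered Lambda: mismatching records are ignored.
export const handler = async (event: { Records: StreamRecord[] }): Promise<number> => {
  const matches = event.Records.filter((r) => crossesThreshold(r, "items", 9, 10));
  for (const record of matches) {
    console.log("threshold reached for", JSON.stringify(record.dynamodb.Keys));
    // The actual job (e.g. enqueueing work) is left out of this sketch.
  }
  return matches.length;
};
```

Lambda retries a batch of stream records when the function fails, so the job triggered here should still tolerate running twice.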

So with the DynamoDB stream, my Lambda is called on every modification and checks specific criteria: if they don't match, it does nothing; otherwise, it does the job. The job itself is nothing more than putting items into a FIFO queue. Note that the Lambda that inserts the 10th item never creates the queue items itself; this happens seamlessly in another Lambda triggered by the DynamoDB stream. That's the power of event-driven Lambdas on AWS, and I like it.
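A sketch of the queue-filling step, assuming the stream Lambda sends jobs with SQS SendMessageBatch. It only builds the request entries: `Job`, `toFifoBatches` and the group id are hypothetical, while `Id`, `MessageBody`, `MessageGroupId` and `MessageDeduplicationId` are the real entry fields. A FIFO queue (whose name must end in `.fifo`) requires a group id on every message and a deduplication id unless content-based deduplication is enabled, and one batch holds at most 10 entries.

```typescript
// One entry of an SQS SendMessageBatch request (only the fields used here).
interface BatchEntry {
  Id: string; // unique within one batch
  MessageBody: string;
  MessageGroupId: string; // required on FIFO queues; ordering is per group
  MessageDeduplicationId: string; // needed unless content-based deduplication is on
}

// SendMessageBatch accepts at most 10 entries per call.
const MAX_BATCH = 10;

// Hypothetical job shape: one unit of work for an image.
interface Job {
  fileId: string;
  step: string;
}

function toFifoBatches(jobs: Job[], groupId: string): BatchEntry[][] {
  const batches: BatchEntry[][] = [];
  jobs.forEach((job, i) => {
    if (i % MAX_BATCH === 0) batches.push([]);
    batches[batches.length - 1].push({
      Id: String(i % MAX_BATCH),
      MessageBody: JSON.stringify(job),
      MessageGroupId: groupId,
      // Deterministic id, so a resend within SQS's 5-minute deduplication window is dropped.
      // It must be at most 128 alphanumeric/punctuation characters; hash it if ids can contain spaces.
      MessageDeduplicationId: `${job.fileId}-${job.step}`,
    });
  });
  return batches;
}

const batches = toFifoBatches(
  Array.from({ length: 12 }, (_, i) => ({ fileId: `file-${i}`, step: "resize" })),
  "image-processing",
);
console.log(batches.map((b) => b.length)); // [ 10, 2 ]
```

Each inner array would then be sent as the `Entries` of one SendMessageBatch call.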

Finally, once my FIFO queue has data, an alarm based on the number of messages currently in the queue automatically launches EC2 Spot Instances. On those instances, a Node.js program reads the queue and consumes every item in it.
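A sketch of the consumer side, under stated assumptions. One common way to build the alarm is a CloudWatch alarm on the queue's `ApproximateNumberOfMessagesVisible` metric driving a scaling policy; the code below only covers the Node.js loop. To keep it runnable without AWS, `QueueClient` is a hypothetical interface standing in for SQS ReceiveMessage/DeleteMessage, and `MemoryQueue` is an in-memory stand-in for local testing.

```typescript
// Hypothetical stand-in for SQS: with the real service you would long-poll
// (WaitTimeSeconds) and delete each message by its ReceiptHandle.
interface QueueMessage {
  body: string;
  receiptHandle: string;
}

interface QueueClient {
  receive(max: number): Promise<QueueMessage[]>;
  delete(receiptHandle: string): Promise<void>;
}

// Consume until the queue returns nothing: handle each message, then delete it.
// Deleting only after success means a crash leaves the message in the queue.
async function drainQueue(
  queue: QueueClient,
  handle: (body: string) => Promise<void>,
): Promise<number> {
  let handled = 0;
  for (;;) {
    const messages = await queue.receive(10);
    if (messages.length === 0) return handled;
    for (const message of messages) {
      await handle(message.body);
      await queue.delete(message.receiptHandle);
      handled += 1;
    }
  }
}

// In-memory queue for trying the loop locally; unlike SQS it has no visibility timeout.
class MemoryQueue implements QueueClient {
  private pending: QueueMessage[];
  constructor(bodies: string[]) {
    this.pending = bodies.map((body, i) => ({ body, receiptHandle: `rh-${i}` }));
  }
  async receive(max: number): Promise<QueueMessage[]> {
    return this.pending.slice(0, max);
  }
  async delete(receiptHandle: string): Promise<void> {
    this.pending = this.pending.filter((m) => m.receiptHandle !== receiptHandle);
  }
}

const demo = new MemoryQueue(["job-1", "job-2", "job-3"]);
drainQueue(demo, async (body) => {
  console.log("processing", body);
}).then((count) => console.log(`done: ${count} messages`));
```

Processing messages one at a time also keeps the per-group order that a FIFO queue delivers.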

Thanks for reading! I hope you enjoyed this article.