How rethinking your system design can save you money

Hey folks,

For a couple of months now, I've been working on a CRM-like SaaS product. I've done about 80% of the project, and when you get to the remaining bits, there are always concerns or questions that are hard to answer. In this post I'll walk through the problem I faced, my thought process, and how I solved it.

Intro

What does my CRM-like SaaS actually do?

It's actually pretty simple. If you have a physical store, you might end up using an Excel sheet to take orders in your shop or by phone. You prepare the orders, and then your clients come to pick them up. What I wanted to do is improve that experience for both the merchant and the customers. The merchant gets a well-crafted, intuitive tool to take client orders, and the clients get a text message on order confirmation and/or a reminder to pick up their order.

What was the technical issue?

So there are 2 types of notification:

- Confirmation notification, sent right after the order is registered by the merchant

- Reminder notification, sent X days before pickup

The reminder notification is where things get a little tricky. For the confirmation, you just send it immediately when creating the order in the first place. But how do you execute a specific handler at a given time?

To me it rings some design bells: delayed queuing or cron.

I don't know why, but I initially went with the queuing option over cron. In my mind, cron makes a bit more sense for something triggered on a regular basis, even though it would work perfectly well if you wanted to trigger something only once.

Once I picked queuing, the next question was whether it's critical that no item ever gets lost, or whether it's fine as long as we can resume them in some way.

If it's critical, I presume any queuing system out there would work, such as SQS, Kafka, or RabbitMQ. However, for my level of usage I found these solutions a bit overkill. In the end I went with BullMQ, which is a Redis-based queuing system with all the mechanics you might need from one. It means that locally, for your DX, you simply set up a Docker container with Redis and you are ready to go.

I did all of my stuff and the integration looked good. Then I started looking at how I would run it in production. That led me to look at how to expose a Redis Docker container over the internet securely with Traefik. I managed to get something working, but it was not as straightforward as I thought. Here you might ask why I would do that in the first place when there are plenty of managed Redis offerings that spare you the pain of self-hosting. You are definitely right, but I find them terribly expensive for what they are. For sure it's peace of mind, and you don't have to take care of maintaining that piece (monitoring, upgrades, security and so on).
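To make the "delayed queuing" idea concrete, here is a minimal sketch of how the delay for a reminder could be computed. The function name `reminderDelayMs` and its parameters are my own for illustration, not taken from the actual project. BullMQ accepts a `delay` job option in milliseconds, so this is the value you would hand to it.

```typescript
const MS_PER_DAY = 24 * 60 * 60 * 1000;

/**
 * Milliseconds to wait from `now` until `daysBefore` days ahead of `pickupAt`.
 * Returns 0 when that moment is already in the past, so the reminder goes out right away.
 * (Hypothetical helper, for illustration only.)
 */
function reminderDelayMs(pickupAt: Date, daysBefore: number, now: Date = new Date()): number {
  const remindAt = pickupAt.getTime() - daysBefore * MS_PER_DAY;
  return Math.max(0, remindAt - now.getTime());
}

// With BullMQ, this value would go in the `delay` job option, for example:
// await queue.add("reminder", { orderId }, { delay: reminderDelayMs(pickupAt, 2) });
```

Clamping to 0 is a design choice on my side: an order taken less than X days before pickup still gets its reminder instead of being silently skipped.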

BullMQ solution pros and cons:

pros

- Good developer experience locally through Redis + Docker

- bull/BullMQ is battle-tested and a standard in Node.js queuing

cons

- Self-hosting is not that easy

- Managed solutions are not very cheap

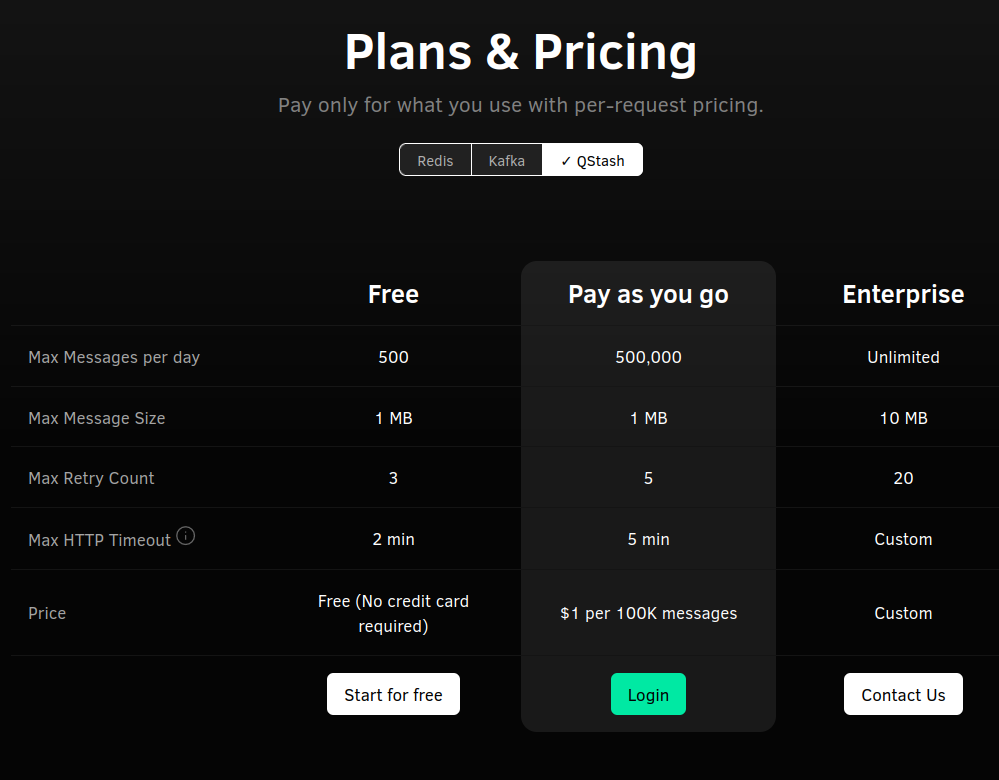

I started wondering whether I could get rid of the Redis piece, so that I wouldn't have to either pay for it or self-host it. This is what brought me to Upstash, an amazing product I'd heard about recently that seemed to do the job. It has a decent free tier and offers Redis, Kafka, and a proprietary solution called QStash (an HTTP-based queuing system).

For a while their Redis offering was an option, but it looks like it's not yet fully supported by BullMQ. Another reason is that using it with BullMQ doesn't seem very relevant: BullMQ appears to run a lot of operations to manage its queues, so you might burn through your free tier sooner than expected.

I didn't want to go the Kafka route for the same reason as earlier. That left QStash as an option. The only thing making me reluctant was that I didn't want to lock myself into a solution that isn't really interchangeable. With self-hosted BullMQ, I can move to managed Redis in the blink of an eye just by changing my Redis endpoint. That would not be the case with QStash, but never mind, I'm curious, so let's give it a try.

QStash works well. It's actually an API endpoint that forwards to your desired endpoint, that's it.

It works this way:

    curl -XPOST \
        -H 'Authorization: Bearer XXX' \
        -H "Content-type: application/json" \
        -d '{ "hello": "world" }' \
        'https://qstash.upstash.io/v1/publish/https://example.com'

You set up your route, which represents your consumer, and it will receive your message payload.
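If you'd rather build that request from your backend than from a shell, the sketch below constructs the same call in TypeScript. The helper `buildPublishRequest` and the `PublishRequest` shape are names I made up for illustration. The endpoint, headers, and payload are the ones from the curl command above.

```typescript
interface PublishRequest {
  url: string;
  init: { method: "POST"; headers: Record<string, string>; body: string };
}

// Builds the same request as the curl command: the destination URL is appended
// to the publish endpoint, and the JSON payload is what gets forwarded to it.
// (Hypothetical helper, for illustration only.)
function buildPublishRequest(token: string, destination: string, payload: unknown): PublishRequest {
  return {
    url: `https://qstash.upstash.io/v1/publish/${destination}`,
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${token}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify(payload),
    },
  };
}

// Sending it is then a single call, assuming a runtime with a global `fetch`:
// const { url, init } = buildPublishRequest("XXX", "https://example.com", { hello: "world" });
// const response = await fetch(url, init);
```

Keeping the request construction separate from the network call makes it easy to unit test without actually hitting the service.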

There is an SDK for doing this, if you don't want to send the request yourself:

https://www.npmjs.com/package/@upstash/qstash

There are also ways to validate who is sending that data, with signature validation on your route.

With that you have a "managed" or "serverless" queuing system out of the box, free up to 500 messages per day, then $1 per 100k messages. Amazing. Another good thing about their solution is that you get logs with payload and status out of the box. This saves you the extra step of managing, storing, and visualizing your items.

It looks good, but the concerns I had with my self-hosted solution are exactly the same with Upstash. Sure, in a serverless way I get a decent queuing system, BUT that's an extra piece in my system that I'll be relying on. What if Upstash goes down? What if there is congestion in their system? And so on. IMHO the less you depend on external things that are critical for your business, the better.

Upstash QStash solution pros and cons:

pros

- Good developer experience locally: you can set up local tunneling with ngrok or similar to forward messages to your local route

- Decent free tier

- Simple to use sdk

- Reliability

- Serverless aspect (no infra to monitor, manage etc)

cons

- Proprietary solution

- External third party

Finally, I came back to another solution I had in mind from the beginning: the cron solution. My stack looks like this:

- Nest.js for the API

- PostgreSQL db with prisma

- Nuxt.js for the frontend

- TailwindCSS for the style

- Supabase for authentication

Nest has a built-in cron system.

The idea is the following:

- Confirmation notifications are triggered by a one-off cron that executes immediately

- Reminder notifications are triggered by a one-off cron that executes at a given time

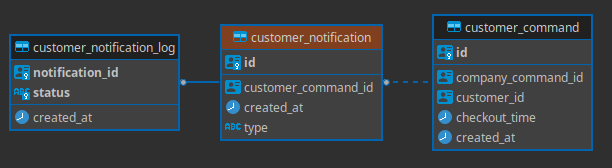
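To show the shape of the "one-off trigger" idea, here is a rough sketch using plain Node timers as a stand-in for Nest's scheduler. `OneShotScheduler` and its methods are hypothetical names of mine, not the actual implementation. In a Nest app you would more likely register the timers through the scheduler registry from `@nestjs/schedule`.

```typescript
type Handler = () => void;

// Node timers take a signed 32-bit delay (about 24.8 days); a larger value
// is not honored and the timer fires almost immediately.
const MAX_TIMEOUT_MS = 2 ** 31 - 1;

// Hypothetical in-memory scheduler keyed by notification id.
class OneShotScheduler {
  private readonly timers = new Map<string, ReturnType<typeof setTimeout>>();

  /** Schedules `handler` once at `runAt`. Returns false if `id` is already scheduled or too far away. */
  schedule(id: string, runAt: Date, handler: Handler, now: Date = new Date()): boolean {
    const delay = Math.max(0, runAt.getTime() - now.getTime());
    if (this.timers.has(id) || delay > MAX_TIMEOUT_MS) return false;
    const timer = setTimeout(() => {
      this.timers.delete(id); // the entry is gone once the handler has been triggered
      handler();
    }, delay);
    this.timers.set(id, timer);
    return true;
  }

  has(id: string): boolean {
    return this.timers.has(id);
  }

  cancel(id: string): void {
    const timer = this.timers.get(id);
    if (timer !== undefined) clearTimeout(timer);
    this.timers.delete(id);
  }
}
```

A confirmation is simply `schedule(id, new Date(), handler)`, which gives a delay of 0. Anything rejected for being too far in the future has to be picked up by a later pass, since nothing here is persisted.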

I keep track of all of this in tables. Here is my schema from a customer_command perspective:

A command has 1 to n notifications, each with a given type (reminder or confirmation), and each notification has its own log with a status: queued, sent, or received.

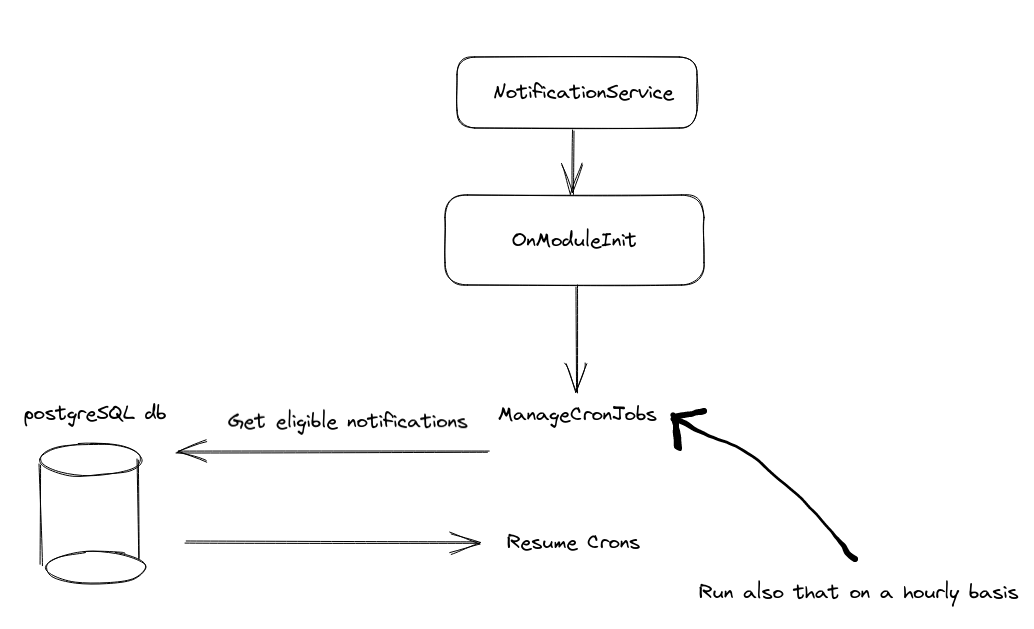
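Expressed as TypeScript types, that model could look roughly like the sketch below. Only the type and status values come from the description above. The field names (`sendAt`, `pickupAt`, `logs`, and so on) and the `currentStatus` helper are assumptions of mine and may not match the real schema.

```typescript
type NotificationType = "confirmation" | "reminder";
type NotificationStatus = "queued" | "sent" | "received";

// Field names below are illustrative assumptions, not the real column names.
interface NotificationLog {
  status: NotificationStatus;
  at: Date;
}

interface CustomerNotification {
  id: string;
  type: NotificationType;
  sendAt: Date;
  logs: NotificationLog[];
}

interface CustomerCommand {
  id: string;
  pickupAt: Date;
  notifications: CustomerNotification[]; // 1 to n per command
}

/** Latest logged status, or undefined when nothing has been logged yet. */
function currentStatus(notification: CustomerNotification): NotificationStatus | undefined {
  if (notification.logs.length === 0) return undefined;
  return notification.logs.reduce((latest, log) =>
    log.at.getTime() >= latest.at.getTime() ? log : latest,
  ).status;
}
```

Keeping the status as an append-only log rather than a single column means you retain the history of each notification.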

The only tricky point is that the cron system in Nest.js does not keep track of crons in a persisted way, which means that if your server goes down or restarts, you lose track of your scheduled crons. The idea from there was to be able to resume these crons from pg.

That system is pretty simple. On module initialization, no schedule is set by default, so just fetch all customer notifications that are queued but not sent yet. Then go through the existing scheduled crons and re-schedule the ones that are missing (all of them in the case of init). The other part is to run that same check from a cron on, let's say, an hourly basis. From that point you have a fully resumable "scheduling" system for notifications.

In-house Nest.js cron-based solution
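The resume step described above can be sketched as a small reconciliation function. This is a simplified illustration under my own assumptions: `resumeQueued`, `QueuedNotification`, and the two callbacks are hypothetical names, and the callbacks stand in for whatever scheduler registry and database query the real code uses.

```typescript
interface QueuedNotification {
  id: string;
  sendAt: Date;
}

/**
 * Re-schedules every queued notification that has no live schedule.
 * `isScheduled` and `schedule` are stand-ins for the scheduler registry.
 * Returns the ids that were scheduled during this pass.
 */
function resumeQueued(
  queued: QueuedNotification[],
  isScheduled: (id: string) => boolean,
  schedule: (id: string, delayMs: number) => void,
  now: Date = new Date(),
): string[] {
  const resumed: string[] = [];
  for (const notification of queued) {
    if (isScheduled(notification.id)) continue; // already tracked in memory
    // Overdue notifications get a delay of 0 so they go out right away.
    schedule(notification.id, Math.max(0, notification.sendAt.getTime() - now.getTime()));
    resumed.push(notification.id);
  }
  return resumed;
}
```

In a Nest app, the same function could plausibly be called once from an `onModuleInit` hook and again from an hourly `@Cron` job, with `queued` coming from a Prisma query. On the first call nothing is scheduled, so everything is resumed. On later calls only the missing entries are.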

pros

- Good DX

- Integrated to your existing backend

- No third party or extra infrastructure required

- Cheap solution

cons

- More boilerplate to implement and track your system

I hope you liked the way I presented things. I always find it interesting to test multiple things to find the right fit for your needs. It also helps you discover new products, such as Upstash for example, and shows you how they could be useful for other projects.