How I securely back up my PostgreSQL database myself

The other day, I was looking for a replacement for my old solution for dumping my database across the internet securely. I needed it to run daily, safely and in isolation, and to transfer and store the data securely in the cloud.

I came across this:

It inspired me; however, the way it was implemented did not fit exactly what I was looking for, so I rewrote it based on my needs and came up with something like this.

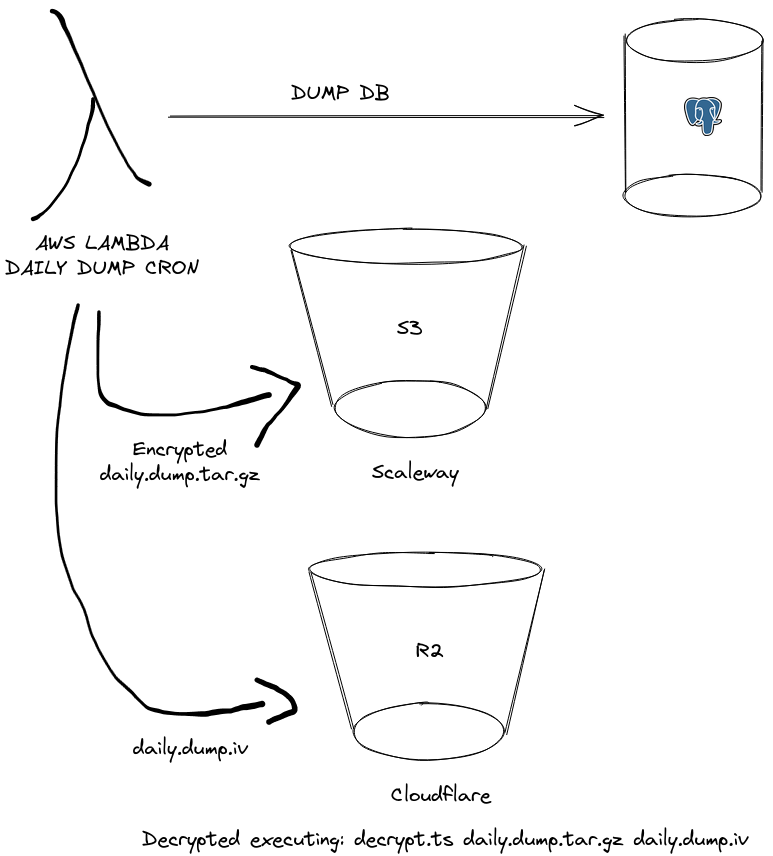

The workflow is the following:

- Kick off a function in AWS Lambda on a daily basis. `cron(0 3 * * ? *)` is the cron expression I used in the Serverless Framework; it fires every day at 03:00 UTC.

- Create the dump archive and generate a key and an IV, as I was targeting AES-256-GCM encryption.

- Send the archive to Scaleway Object Storage (an S3-API-compatible solution), and send the IV file, with the key passed alongside in whatever way you want (through the filename, metadata, etc.), to R2, the S3-compatible object storage alternative by Cloudflare.

- Use a simple utility to decrypt your ciphered file.
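The second step of the workflow (generating a key and an IV, then encrypting with AES-256-GCM) can be sketched as follows. This is a minimal illustration and not the code running in my Lambda: the `EncryptedBackup` shape and the `encryptDump` / `decryptDump` names are hypothetical, and the dump is held in memory for brevity, whereas a real dump would more likely be streamed. It also shows the authentication tag that GCM produces, which has to be kept next to the IV so the decrypting side can verify the data.

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto"

// Hypothetical container for everything the decrypting side needs
interface EncryptedBackup {
  ciphertext: Buffer
  key: Buffer // 32 bytes for AES-256
  iv: Buffer // 12 bytes, the IV size recommended for GCM
  authTag: Buffer // 16 bytes by default, returned by cipher.getAuthTag()
}

// Encrypt an in-memory dump with a fresh random key and IV
function encryptDump(dump: Buffer): EncryptedBackup {
  const key = randomBytes(32)
  const iv = randomBytes(12)
  const cipher = createCipheriv("aes-256-gcm", key, iv)
  const ciphertext = Buffer.concat([cipher.update(dump), cipher.final()])
  // The tag is only available after final() has been called
  return { ciphertext, key, iv, authTag: cipher.getAuthTag() }
}

// Decrypt and authenticate; final() throws if the tag does not match
function decryptDump({ ciphertext, key, iv, authTag }: EncryptedBackup): Buffer {
  const decipher = createDecipheriv("aes-256-gcm", key, iv)
  decipher.setAuthTag(authTag)
  return Buffer.concat([decipher.update(ciphertext), decipher.final()])
}
```

Never reuse an IV with the same key in GCM; generating both per backup, as above, avoids that. The decryption utility for the last step follows.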

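#!/usr/bin/env node
import * as fs from "node:fs"
import { Command } from "commander"
import { basename, dirname, join } from "node:path"
import { createDecipheriv } from "node:crypto"
import { pipeline } from "node:stream/promises"

const program = new Command()

program
  .version("0.0.1")
  .description("Decrypt an AES-256-GCM encrypted file")
  .arguments("<encryptedFile> <ivFile>")
  .action(async (encryptedFile: string, ivFile: string) => {
    // The IV file contains the hex-encoded IV
    const iv = fs.readFileSync(ivFile, "utf8").trim()
    // Change the key retrieval according to the way you are passing it.
    // Here it is the last "_"-separated part of the IV filename, up to the first "."
    const key = basename(ivFile).split("_").pop()?.split(".").shift()
    if (!key) {
      throw new Error(`Could not extract the key from ${ivFile}`)
    }
    const decipher = createDecipheriv(
      "aes-256-gcm",
      Buffer.from(key, "hex"),
      Buffer.from(iv, "hex")
    )
    // Note: in GCM mode, Node.js requires decipher.setAuthTag(tag) before the
    // stream ends, otherwise decipher.final() throws. Where the tag comes from
    // depends on how the encrypting side stored it.
    const fileBasename = basename(encryptedFile)
    const outputPath = join(dirname(encryptedFile), `unencrypted_${fileBasename}`)
    // Wait for the whole stream to be written before reporting success
    await pipeline(
      fs.createReadStream(encryptedFile),
      decipher,
      fs.createWriteStream(outputPath)
    )
    console.log(`Decrypted file written to ${outputPath}`)
  })
  .parseAsync(process.argv)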

It can be run like this, for instance:

npx ts-node decrypt.utility.ts '/home/foo/2023-05-17.backup.gz' '/home/foo/backup_2023-05-17_your_key.backup.iv'

Pros:

- You can build something customized

- Your data is encrypted and never goes over the internet unencrypted

- AWS Lambda offers you isolation and consistency for recurring and scheduled triggers

- You can scale your function with multiple triggers and different events. Basically, 1 event = 1 database to dump
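To illustrate the "1 event = 1 database" idea, here is a rough sketch of how each scheduled event could carry the connection details of the database to dump. The `BackupEvent` shape and the `buildPgDumpArgs` helper are hypothetical names chosen for this example; only the `pg_dump` flags themselves are real.

```typescript
// Hypothetical event payload: one scheduled trigger per database
interface BackupEvent {
  host: string
  port?: number
  database: string
  user: string
}

// Build the pg_dump argument list for the database described by one event.
// The password is expected to come from the environment (for example
// PGPASSWORD), hence --no-password to avoid an interactive prompt.
function buildPgDumpArgs(event: BackupEvent): string[] {
  return [
    "--host", event.host,
    "--port", String(event.port ?? 5432),
    "--username", event.user,
    "--dbname", event.database,
    "--no-password",
  ]
}
```

The resulting array could then be handed to something like `spawn("pg_dump", args)` from `node:child_process` inside the Lambda handler, with each schedule passing a different event.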

Cons:

- You have to maintain your backup mechanism

- You need to monitor for failed or interrupted backups

- Lambda has some limitations (15 minutes maximum execution time), so it might not work if dumping your database takes too long

- While you increase the complexity of gathering everything needed to decrypt your data, you also expose yourself to more services, which is in a way a bigger exposure to threats
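One way to soften the monitoring and time-limit cons is to fail loudly before Lambda kills the function, so the failure shows up as a normal error. The sketch below is an assumption about how this could be done, not part of my setup: `withDeadline` and `LambdaContextLike` are hypothetical names, while `getRemainingTimeInMillis()` is the method the Lambda Node.js runtime exposes on its context object.

```typescript
// Minimal slice of the Lambda context object used here
interface LambdaContextLike {
  getRemainingTimeInMillis(): number
}

// Reject if `work` has not settled before the Lambda deadline minus a safety
// margin. The underlying work is not cancelled; the caller only learns that
// it is running out of time and can report the failure.
async function withDeadline<T>(
  work: Promise<T>,
  context: LambdaContextLike,
  marginMs = 10_000
): Promise<T> {
  const budget = Math.max(context.getRemainingTimeInMillis() - marginMs, 0)
  let timer: ReturnType<typeof setTimeout> | undefined
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(
      () => reject(new Error(`Backup exceeded its ${budget} ms budget`)),
      budget
    )
  })
  try {
    return await Promise.race([work, timeout])
  } finally {
    // Avoid keeping the event loop alive once the race is decided
    clearTimeout(timer)
  }
}
```

Inside a handler this could wrap the dump-and-upload promise, for example `await withDeadline(runBackup(event), context)`, where `runBackup` stands for whatever function performs the backup.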